Advanced Micro Devices (AMD) delivered a strong “Advancing AI 2024” event. While the event conveyed an exceptional AI story, there were a few minor negatives that likely soured investor perceptions and caused the stock to dip during the event. They are:

AMD CEO Lisa Su talked about several new hyperscalers coming on board with MI300X during the Q2 earnings call, but no new hyperscale customer announcements were made at the event.

The new flagship accelerator, MI325, was announced as expected, but general availability was set for Q1. While AMD is likely to recognize a small amount of MI325 revenue in Q4, the Q1 general availability date was likely seen as a small negative.

And, finally, Lisa Su’s presentation style was well short of exciting and likely disappointed investors.

Aside from these minor disappointments, the presentation was extremely strong.

To start off, Lisa Su updated the AI TAM forecast from $400B in 2027 to $500B in 2028. On the face of it, 25% growth from 2027 to 2028 on top of a staggering $400B base should be seen as a big positive, since AMD is likely relaying the sum of the opportunities it is seeing from its customers. Note that Lisa Su set the tone on the AI TAM last year, and the number has since been widely adopted by other players. The same is likely to happen again as other CEOs and market researchers adopt the new, larger 2028 number. As analysts extend their models to the outer years, AMD’s TAM upgrade could mark the beginning of another bull run in semiconductors and hyperscalers (along with other elements of the supply chain).
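The 25% figure follows directly from the two TAM numbers quoted above. A minimal sketch of the arithmetic, where the function and variable names are illustrative and not from AMD's materials:

```python
def yoy_growth(previous: float, current: float) -> float:
    """Return fractional growth from `previous` to `current`."""
    return (current - previous) / previous


# TAM figures quoted in the text, in billions of US dollars.
tam_2027_busd = 400.0
tam_2028_busd = 500.0

growth = yoy_growth(tam_2027_busd, tam_2028_busd)
print(f"Implied 2027-to-2028 TAM growth: {growth:.0%}")
```

Note that this is a single-year step between two forecast endpoints, not a compound rate over the whole forecast period.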

EPYC Seems Set To Explode

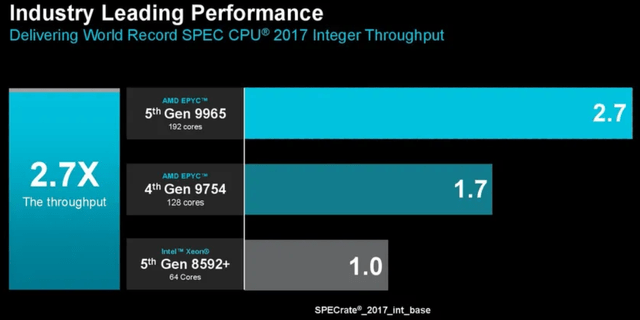

AMD started its product pitch with CPUs. While the presentation itself was dull, Zen 5 has lived up to its billing and is delivering exceptional performance for servers (image below).

Note the strong IPC uplifts, which are significantly higher than what we saw on the Ryzen client side a few weeks back. As previously discussed at BTH, AMD chose to spend the incremental silicon area on the client side on the NPU. While the NPU enables generative AI applications on the client, it provides little benefit to traditional applications.

On the server side, AMD chose to combine the N3 and N4 goodness with the new architecture to increase legacy application performance along with AI application performance. This has helped AMD retain a very strong performance lead over Intel (INTC) (images below).

Note that AI support has been one of Xeon’s strengths and one of the few bright spots for Intel in recent years. AMD has considerably strengthened the Zen 5 CPU architecture to better position it against Intel in AI applications (image below).

Many low-intensity AI applications will be run on CPUs with good inference performance, making CPUs a lot more attractive to deploy in this age of AI. EPYC Zen 5’s AI performance advantage over Xeons should help AMD further increase its CPU share.

As has been widely discussed in the past at BTH, Nvidia (NVDA), for strategic reasons, chose to use Intel Xeons as the x86 CPU for all its reference designs for Hopper and Blackwell. As such, these reference designs are now the single largest driver of Intel Xeon design wins and AI revenues.

AMD is now going on the offensive to capture x86 design wins with Hopper and Blackwell. AMD shared how much incremental AI performance can be had by going with AMD EPYC CPUs instead of the Xeons recommended by Nvidia (image below).

While one should take any competitive benchmarking claims with a pinch of salt, the numbers that AMD shared are eye-popping. Considering that GPU investments are costing companies anywhere from hundreds of thousands to billions of dollars, a double-digit performance advantage can translate to huge economic returns. The size of the gains is likely to make customers start looking to replace Xeon designs with EPYC.

Investors may be skeptical about whether AMD will gain much share given Nvidia’s market power. After all, why should Nvidia not continue to push Xeon hard, as it has been doing so far?

Investors should note that a critical factor is starting to play in AMD’s favor. Previously, there was no real competition to Nvidia in the GPU world, but AMD is increasingly a legitimate competitor. By making Xeon the reference head-end CPU, Nvidia is leaving a lot of system-level performance on the table, which puts it at a competitive disadvantage to AMD’s CPU+GPU alternatives.

AMD also provided information on how much its own GPUs can gain when customers switch from Xeon to EPYC for the head-end of the AI server (image below).

We can increasingly expect that both AMD and Nvidia GPUs will be paired with EPYC CPUs. EPYC’s competitive advantage is likely to continue to drive AMD’s server market share (image below).

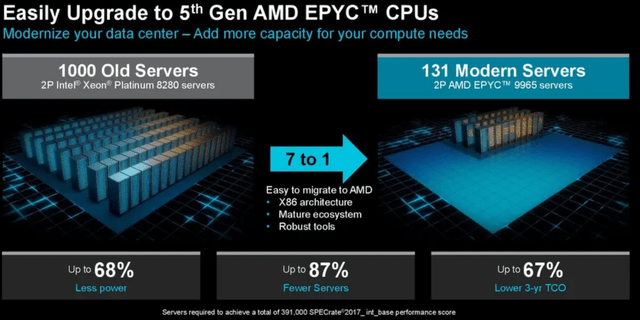

AMD argues that one EPYC server can replace 7 Xeon servers from 5 years back, thus saving enormous amounts of space and power in the data center and dramatically reducing the TCO (image below).

AMD argues that the freed-up space and power can be used to add incremental EPYC CPU or Instinct GPU capacity (image below). Given the increasingly challenging data center capacity constraints, this pitch is likely to resonate well with many enterprises and CSPs. AMD will also see a dramatic market share rise if EPYC Zen 5 replaces the legacy server infrastructure.
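To get a feel for what the consolidation pitch implies, here is a rough sketch that takes AMD's claimed 7-to-1 replacement ratio at face value. The fleet size of 1,000 legacy servers is a hypothetical input, the function name is illustrative, and the sketch assumes every server occupies one equal-sized slot; it says nothing about power draw, which the source does not quantify.

```python
import math


def consolidate(legacy_servers: int, ratio: int = 7) -> tuple[int, int]:
    """Return (new servers needed, slots freed) for a given replacement ratio.

    `ratio` defaults to AMD's claimed 7 legacy Xeon servers per EPYC server.
    Assumes one slot per server, old or new (a simplification).
    """
    new_servers = math.ceil(legacy_servers / ratio)
    freed_slots = legacy_servers - new_servers
    return new_servers, freed_slots


# Hypothetical fleet size, chosen only for illustration.
new_servers, freed_slots = consolidate(1000)
print(f"New EPYC servers needed: {new_servers}")
print(f"Slots freed for added CPU or GPU capacity: {freed_slots}")
```

Under these assumptions, most of the original footprint becomes available for the incremental EPYC or Instinct capacity that AMD is pitching, which is why the argument is likely to land with capacity-constrained operators.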

Instinct Gaining Against Nvidia GPUs
