Note: this article does not fundamentally change the thesis of any of the stocks discussed, but assesses how 2024 developments impact the market in 2025 and takes a first look into 2025 demand.

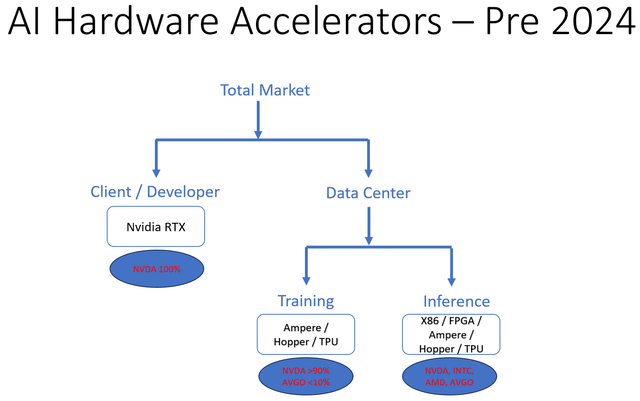

Pre-2023, the AI Accelerator market was ruled by Nvidia (NVDA) with almost no competition. When ChatGPT hit in late 2022 and led to a massive AI boom, Nvidia alone had an accelerator that could address LLM training and inference. Nvidia has thus delivered massive revenue and profits (approaching 80% overall gross margins) over the last several quarters. As the image shows, the AI Accelerator landscape was a simple one prior to 2024.

Note from the image that at the client end there was no choice but Nvidia. Developers building on the Nvidia platform naturally deployed production systems on Nvidia hardware. At the data center level, hyperscalers were experimenting with various alternatives – CPUs, GPUs, FPGAs, and ASICs. Of these, only Alphabet (GOOG) (GOOGL) used its TPU, made by Broadcom (AVGO), for training. Other custom and semi-custom silicon ventures from Amazon (AMZN), Meta (META), and Microsoft (MSFT) did not scale to meaningful volumes. In these early stages of AI, inference was a smaller business than training and was done on x86 CPUs – mostly Intel (INTC) Xeon but also Advanced Micro Devices (AMD) EPYC. What was not done on CPUs was done on Nvidia Ampere or Hopper GPUs and, to a lesser extent, on Xilinx and Altera FPGAs.

Competition is intensifying as we go through 2024, with AMD’s Instinct MI300 and Broadcom’s “Customer 1” and “Customer 2” XPUs ramping. It is worth noting that while Broadcom’s current XPUs are somewhat dated, MI300 offers performance above Nvidia’s Hopper generation. Note that Nvidia’s H200 cannot really be counted as a next-generation product since it still uses the Hopper die and, despite gains in inference performance, is likely to continue to lag AMD’s MI300 generation.

2025 AI Accelerator Market Is Shaping Up To Be Highly Competitive

For the next generation of products, we already know much about Blackwell. Given Nvidia’s dominance, Blackwell will be the baseline against which all others will be judged. Nvidia guided to Blackwell production availability late in the year, and Meta commented that it does not expect to see systems until very late this year or early next year. This schedule suggests that Nvidia will be kickstarting Blackwell wafers at TSMC (TSM) in Q2.

Broadcom’s closest competitive entry will be the XPU it expects to ramp for “Customer 3” this year (likely Microsoft/OpenAI; see the article “Broadcom Primed For AI Era With Standards” for detail). Given the long cycle times of this class of products (typically 6 to 8 months), chances are high that final wafer orders will hit TSMC (TSM) sometime in Q2. In terms of performance, we have no official data. However, the XPU Broadcom demonstrated at its AI presentation had 12 channels of HBM, 50% more than MI300 or Blackwell B200, so Broadcom’s XPU is likely to outperform Nvidia’s Blackwell in some applications, most likely a few specific cases of LLM inference. Broadcom did not give any visibility into what Google (“Customer 1”) and Meta (“Customer 2”) plan to do in 2025. It is reasonable to assume that these customers also have plans for chips of complexity similar to “Customer 3”.
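For readers who want to check the wafer-start timing, the back-of-the-envelope arithmetic can be sketched as follows. This is only an illustration: the December 2024 availability target is an assumption standing in for "late in the year", the helper names are hypothetical, and only the 6 to 8 month cycle time comes from the text above.

```python
from datetime import date


def months_before(target: date, months: int) -> date:
    """Return the first day of the month that is `months` months before `target`."""
    index = target.year * 12 + (target.month - 1) - months
    return date(index // 12, index % 12 + 1, 1)


def quarter(d: date) -> str:
    """Label a date with its calendar quarter, e.g. 'Q2 2024'."""
    return f"Q{(d.month - 1) // 3 + 1} {d.year}"


# Assumption: production availability in December 2024 ("late in the year").
target = date(2024, 12, 1)

# Cycle time of 6 to 8 months, as quoted in the text.
earliest_start = months_before(target, 8)
latest_start = months_before(target, 6)

print("Wafer start window:", earliest_start, "to", latest_start)
print("Quarters:", quarter(earliest_start), "to", quarter(latest_start))
```

Under these assumptions the whole window falls in the second quarter. An earlier availability target would pull the start of the window into Q1, so the conclusion is sensitive to how late in the year the ramp actually lands.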

AMD MI300’s mid-life refresh, let us call it MI300+, cannot really be considered a next-generation product. However, with inference performance expected to be well above that of Nvidia H200 and approaching that of Blackwell B100, it is likely to be a significant player in 2025. The chip is probably being sampled to key customers now and should be in production later this year.
